Starting with sound, we can perceive it across a wide range of meanings: natural sounds, music, human-made sounds, residual, industrial and media sounds, and so on. It is worth noticing that all of them ultimately come from natural sources.

Sound, or rather the meaning we extract from it, together with its production and usage, has changed and evolved with us, our activities and our development, leading to inventions such as music and its different expressions. It is clear that post-modern society appreciates sound and music in its daily life. Our most important application of sound is of course communication and language, which belongs to another study, so I will not discuss it in these lines unless explicitly referring to it.

Technology is another part of human development. In fact, almost all of post-modern human life pours its energy through the channels and constraints of technology, society and nature.

So, as time passes and complexity grows, new ways of creating and synthesizing sound emerge from the technology we have developed following our own organic impulses and instincts, now expressed with modern sophistication.

Music/Sound Technology in particular is what I bring together in these paragraphs. There is an important distinction to consider in this topic, though: on one side, sound considered as music, or the concept of music itself; on the other, the rest of sound as a broader concept, with its uses, applications, synthesis, perception and the effects of the situation in which it is produced.

From the concept of music we can extract the characteristic of being pleasant to listen to, given its quality and its lyrical, acoustic, technical, intellectual and sentimental content. These factors can interconnect: the technique of playing a musical instrument can, for example, cause sentimental bursts that are then expressed through lyrical musical means, and so on.

From this last point of view, sounds other than music can also be considered enjoyable (or musical), owing to their content and to the arrangement of the parameters named above, among others. This means that not only musically created sound events can be enjoyable, but also sounds that communicate other things or serve functions other than aesthetic ones.

Different reasons for creating sound arise from this thinking, such as communicating by whistling in the mountains, signalling an emergency with an alarm, counting time or announcing certain events. Some more sophisticated applications of sound creation can be related to hypermodernity, such as mobile phone ringtones, computer state sounds (earcons and auditory icons) (Cullen, 2006), industry-specific sounds and every sound we are able and willing to create to fit our requirements, bounded by the technology at hand.

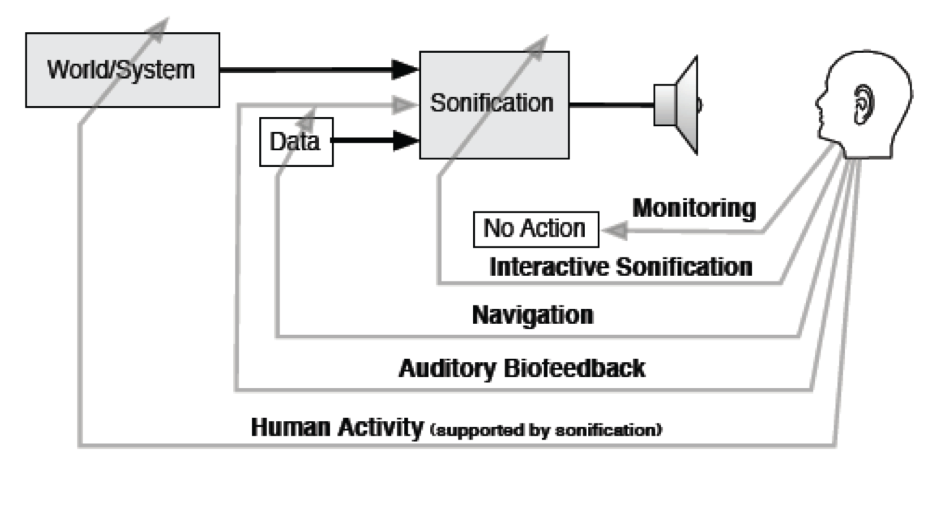

Another sophisticated use of sound, or a more specific definition of it, is what the community refers to as Sonification (Walker & Kramer, 2006), defined as the production of non-speech sound to convey data.

Data can be obtained directly from nature: trees, seas, animals or any phenomenon we are interested in. A huge amount of information is produced by millions of systems out there, some of them of human creation, such as the economic structure or the population of a city. Evolving technology allows sound and musical work to develop in parallel with it, so these systems and their numbers can be transferred onto sound through these new technologies.

A new concept arises here, as there is obviously an overflow of information coming into our hands. New ways of managing information are being created; there is so much information, and so many ways of displaying it, that information management solutions are tailored for almost every occasion. The term Information Architect was coined in 1975, and its meaning has changed over time until becoming a title associated with web applications and data arrangement (Morville, 2004), but it fits well in this line of ideas.

Given that there is plenty of information that can be represented in different ways and managed in various manners, sound can play a role in conveying some of it, creating what is known as Sonification. In a sense, Sonification can be seen as a product of Information Architecture, working as a medium to communicate a message, deliver information or manage it. As discussed in (Cullen, 2006), an auditory display should be able to convey any type of information and data that can be presented with visual methods.

This project aims to create a set of tools that work as a Sonification model in an open, flexible and abstract manner, able to receive data from the most common format for generating and storing it: spreadsheets. The sound processing software used as the engine to create audio is MAX/MSP by Cycling '74. The Virtual Microphone Control (ViMiC) Spatialization technique is used to create spatial 3D audio illusions that allow us to use different sound sources and locate them at any three-dimensional point in the listening space. The coordinates of the sounds’ positions can be mapped from complex data sets presented as spreadsheets, and the speed at which this data is read can also be mapped from spreadsheets; other characteristics of sound can be controlled in the same way.

The parameters of the sound sources included in the system can be controlled from raw data stored in columns of numbers. Examples are the frequency of a given filter or of a pure tone, the amplitude and, as described before, the spatial parameters of what is proposed here as a spatial audio density.
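The column-to-parameter mapping described above can be illustrated with a minimal sketch. It is written in Python rather than MAX/MSP, it is not the engine built in this project, and the column names, parameter names and output ranges are assumptions chosen only for illustration. Each column is rescaled linearly into the range of the sound parameter it controls.

```python
import csv
import io


def scale_column(values, out_min, out_max):
    """Linearly rescale a list of numbers into [out_min, out_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no variation; place it mid-range.
        return [(out_min + out_max) / 2.0 for _ in values]
    return [out_min + (v - lo) / (hi - lo) * (out_max - out_min) for v in values]


def map_rows_to_parameters(csv_text, mapping):
    """Turn each spreadsheet row into one dict of sound parameters.

    mapping: {column name: (parameter name, out_min, out_max)}
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    scaled = {}
    for column, (param, out_min, out_max) in mapping.items():
        values = [float(row[column]) for row in rows]
        scaled[param] = scale_column(values, out_min, out_max)
    return [{param: scaled[param][i] for param in scaled} for i in range(len(rows))]


# Hypothetical data set exported from a spreadsheet as CSV text.
SAMPLE = "temperature,population\n10,1000\n20,3000\n30,2000\n"

# Hypothetical mapping: temperature drives pitch, population drives loudness.
MAPPING = {
    "temperature": ("frequency_hz", 220.0, 880.0),
    "population": ("amplitude", 0.0, 1.0),
}

events = map_rows_to_parameters(SAMPLE, MAPPING)
for event in events:
    print(event)
```

Linear scaling is only one possible choice; a logarithmic mapping may suit frequency better, since pitch perception is closer to logarithmic than to linear.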

This last concept arises from the possibilities of combining raw data with an audio Spatialization technique. By defining coordinate boundaries within which random values for X, Y and Z are generated to locate a given sound, a certain volume is simulated. The control of volume and density is explored in this paper in accordance with ideas expressed in (Saue, 2000), and resembles the granular synthesis technique.
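The idea of a simulated volume can be sketched as follows. This is a minimal Python illustration under my own assumptions, not the MAX/MSP implementation: the function names, the box-shaped boundaries and the definition of density as sources per unit of volume are hypothetical choices made for the example.

```python
import random


def spatial_cloud(bounds, count, seed=None):
    """Place `count` sound sources at random points inside a box.

    bounds: ((x_min, x_max), (y_min, y_max), (z_min, z_max))
    Returns a list of (x, y, z) tuples.
    """
    rng = random.Random(seed)
    return [tuple(rng.uniform(lo, hi) for lo, hi in bounds) for _ in range(count)]


def box_volume(bounds):
    """Volume of the box enclosed by the coordinate boundaries."""
    volume = 1.0
    for lo, hi in bounds:
        volume *= hi - lo
    return volume


def density(bounds, count):
    """Assumed measure of spatial audio density: sources per unit volume."""
    return count / box_volume(bounds)


# Hypothetical listening-space boundaries, in arbitrary distance units.
BOUNDS = ((-1.0, 1.0), (-1.0, 1.0), (0.0, 2.0))

positions = spatial_cloud(BOUNDS, count=16, seed=1)
print(positions[0], density(BOUNDS, 16))
```

Shrinking the boundaries while keeping the number of sources raises the density, and enlarging them lowers it, which is the kind of control the paragraph above refers to.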

The proposed spatial Sonification is similar to the concepts described by Thomas Hermann in (Winter, 2005), where a hierarchy and taxonomy of sonification system design is presented.

On the other hand, the programming of the Spatialization application is based on the layered structure proposed by Peters et al. in (Peters, Lossius, Schacher, Baltazar, Bascou, & Place, 2009), using ViMiC and the special OSC-based communication protocols discussed in (Peters, Doctoral Thesis, 2010), (Peters, Matthews, Braasch, & Stephen, 2008), (Peters, SpatDIF, 2008) and (Peters, Ferguson, & McAdams, Towards SpatDIF, 2007).

In addition to following the standards created or accepted so far by the community for rendering 3D audio with ViMiC, more data can be mapped into the multichannel audio system to control amplitude, frequency and other sound components. This data can be treated as envelope functions developed in a signal or mathematical processing tool, such as MATLAB. One could, for instance, create a complex data set in MATLAB and map its output values into MAX/MSP to control amplitude or other factors. The mathematical tool would model a movement and store the numerical output in an .xls file that can then be read from MAX/MSP and fed into any controllable variable of the Sonification engine.
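The workflow of generating an envelope, storing it as a column of numbers and reading it back can be sketched as below. This is only an illustration under stated assumptions: Python stands in for MATLAB, a CSV file stands in for the .xls file, and the envelope shape (linear attack followed by exponential decay), the function names and the column name are hypothetical.

```python
import csv
import math
import os
import tempfile


def envelope(n_samples, attack_fraction=0.1, decay_rate=5.0):
    """Amplitude envelope: linear attack up to 1.0, then exponential decay."""
    attack_len = max(1, int(n_samples * attack_fraction))
    decay_len = max(1, n_samples - attack_len)
    values = []
    for i in range(n_samples):
        if i < attack_len:
            values.append(i / attack_len)
        else:
            t = (i - attack_len) / decay_len
            values.append(math.exp(-decay_rate * t))
    return values


def write_column(path, name, values):
    """Store the values as a single named column, one number per row."""
    with open(path, "w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow([name])
        for value in values:
            writer.writerow([value])


def read_column(path, name):
    """Read a named column back as a list of floats."""
    with open(path, newline="") as handle:
        return [float(row[name]) for row in csv.DictReader(handle)]


values = envelope(100)
with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "envelope.csv")  # hypothetical file name
    write_column(path, "amplitude", values)
    loaded = read_column(path, "amplitude")

print(len(loaded), max(loaded))
```

The values read back can then drive any controllable variable, in the same way as any other column of raw data.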

As stated in (Winter, 2005), these Sonification configurations open the door to conveying a large flow of information arranged in a highly multidimensional sonic array. Sound can display multidimensional spaces through bursts of several sounds, each with variables and parameters mapped from data. Relationships, rhythms, behaviours and patterns can be uncovered, understood or observed with this approach, as noted in (Walker & Kramer, 2006).

The following sections discuss the theory of Sonification, Sound Spatialization and the acoustic cues considered in creating a Model Based Sonification Engine using Virtual Microphone Control.

…

0. CONTENT