Several acoustic phenomena are considered during the design and conception stages of this project. The spatialization technique used here renders sound through binaural level and time differences.

Interaural Time and Level Differences (ITD and ILD) (Rayleigh, 1876) and Head-Related Transfer Functions (HRTFs) (Sima, 2008) are regarded as the main cues we use to identify the position of a sound source. Sound travels at a fixed speed, so it reaches the two ears at slightly different times and with different levels. The brain interprets these differences to work out where the source is in space and how far away it is.
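The time cue described above can be illustrated with straight-line geometry. The following is a minimal sketch, not the project's model. It assumes a 2-D plane with the ears as two points on the x-axis, a hypothetical half ear spacing of 8.75 cm, a speed of sound of 343 m/s, and no head shadowing. The helper names are illustrative only.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at about 20 °C

def ear_distances(source_x, source_y, half_ear_spacing=0.0875):
    """Straight-line distances (m) from a source to the left and right ears.

    Hypothetical geometry: ears are points at (-half_ear_spacing, 0) and
    (+half_ear_spacing, 0); the head itself is ignored (no shadowing).
    """
    left = math.hypot(source_x + half_ear_spacing, source_y)
    right = math.hypot(source_x - half_ear_spacing, source_y)
    return left, right

def interaural_time_difference(source_x, source_y, half_ear_spacing=0.0875):
    """ITD in seconds; positive when the sound reaches the right ear first."""
    left, right = ear_distances(source_x, source_y, half_ear_spacing)
    # The extra path length to the far ear divided by the speed of sound.
    return (left - right) / SPEED_OF_SOUND
```

Consider a source 2 m directly to the right, `interaural_time_difference(2.0, 0.0)`. The path difference is 0.175 m, which gives an ITD of about 0.51 ms. A source straight ahead gives an ITD of zero.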

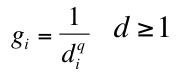

When these aural cues are synthesized, a simple model of the head is used to estimate the distance from the sound source (Stern, Wang, & Brown, 2006) and its position in the listening planes. Distance determines how much the signal's amplitude decays as it travels through air, and the high frequencies are filtered out along the way. This intensity decay is modeled with the inverse-square law (Peters, ViMiC, 2009).
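Under the inverse-square law, intensity falls as 1/r², so pressure amplitude falls as 1/r. That is about −6 dB per doubling of distance. The sketch below shows only this spreading loss and assumes a reference distance of 1 m. The small-distance clamp is an illustrative assumption, not part of ViMiC. The high-frequency air absorption mentioned above would need a separate low-pass filter, which is not modeled here.

```python
import math

def inverse_square_gain(distance, reference_distance=1.0):
    """Amplitude gain relative to the level at reference_distance.

    Intensity follows 1/r**2, so amplitude follows 1/r.
    Assumption: distances are clamped to 1 mm to avoid an infinite gain.
    """
    r = max(distance, 1e-3)
    return reference_distance / r

def level_change_db(distance, reference_distance=1.0):
    """Level change in dB; about -6.02 dB for every doubling of distance."""
    return 20.0 * math.log10(inverse_square_gain(distance, reference_distance))
```

For example, `level_change_db(2.0)` is about −6.02 dB and `inverse_square_gain(4.0)` is 0.25.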

Because the two ears are at slightly different distances from the sound source, they receive different intensities. This interaural level difference (ILD) serves as a localization cue.
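The distance-only part of the ILD follows from applying the 1/r amplitude decay to each ear separately. This is a minimal sketch under assumed geometry: the ears are points 8.75 cm either side of the origin and the source lies in the horizontal plane. It deliberately ignores head shadowing, which in practice contributes most of the ILD at high frequencies. The function name is hypothetical.

```python
import math

def distance_ild_db(source_x, source_y, half_ear_spacing=0.0875):
    """ILD (dB) caused only by the path-length difference between the ears.

    Positive when the right ear is louder. Uses 1/r amplitude decay per ear
    and ignores head shadowing (an assumption of this sketch).
    """
    left = math.hypot(source_x + half_ear_spacing, source_y)
    right = math.hypot(source_x - half_ear_spacing, source_y)
    # Amplitude ratio right/left = left_distance/right_distance under 1/r.
    return 20.0 * math.log10(left / right)
```

A source 2 m to the right gives roughly 0.76 dB. The same source at 0.5 m gives about 3 dB. This shows why the distance-only ILD matters mostly for nearby sources.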

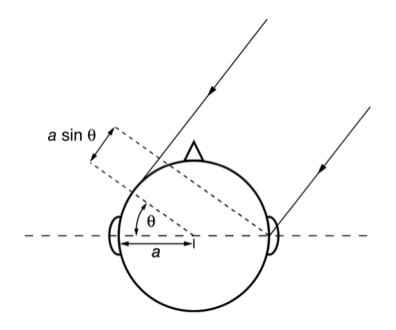

The same difference in distance from the sound source, together with the shadowing effect of the head and upper body, alters the timing and frequency content of the sound we hear (Zhou). The model used to calculate these distances is the following:

The wavelength of the sound plays an important role in how the ITD is perceived, because of phase differences and aliasing (Stern, Wang, & Brown, 2006). For this reason, separate expressions for the time (phase) differences are derived:

(Stern, Wang, & Brown, 2006) for frequencies below 500 Hz, and

(Stern, Wang, & Brown, 2006) for frequencies above 2 kHz.
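The two expressions themselves are missing from this text. For orientation only, a widely used rigid-sphere approximation of the head is sketched below. It assumes ITD ≈ 3(a/c)·sin θ below 500 Hz and ITD ≈ 2(a/c)·sin θ above 2 kHz, where a is the head radius, c the speed of sound and θ the azimuth of a distant source. This assumed form may differ from the exact expressions in the cited source. The head radius of 8.75 cm, the speed of 343 m/s and the function names are illustrative assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed
HEAD_RADIUS = 0.0875    # m, assumed typical adult head radius

def spherical_head_itd(azimuth_rad, frequency_hz, a=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """ITD (s) of a rigid-sphere head for a distant source at the given azimuth.

    Assumed form: 3(a/c)sin(theta) below 500 Hz, 2(a/c)sin(theta) above 2 kHz.
    No closed form is used between those limits, so None is returned there.
    """
    if frequency_hz < 500.0:
        factor = 3.0
    elif frequency_hz > 2000.0:
        factor = 2.0
    else:
        return None
    return factor * (a / c) * math.sin(azimuth_rad)

def interaural_phase_difference(azimuth_rad, frequency_hz, a=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """IPD (rad) = 2*pi*f*ITD, without wrapping to (-pi, pi]."""
    itd = spherical_head_itd(azimuth_rad, frequency_hz, a, c)
    if itd is None:
        return None
    return 2.0 * math.pi * frequency_hz * itd
```

For a source at 90° azimuth, this gives about 0.77 ms at 250 Hz and about 0.51 ms at 4 kHz. At 4 kHz the unwrapped phase difference is already well beyond π. Such a phase is ambiguous once wrapped, which illustrates the aliasing that makes ITD an unreliable cue at high frequencies.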

…

Later in this chapter:

0. CONTENT

2.1 SONIFICATION

2.1.1 SONIFICATION DEFINITIONS AND CONCEPTS

2.2 SPATIALIZATION

2.2.1 ACOUSTICS INVOLVED IN SPATIALIZATION

2.2.1.1 COORDINATES SYSTEM

2.2.1.2 DELAY AND GAIN

2.2.1.3 REFLECTIONS

2.2.1.4 SOUND ACQUIREMENT

2.2.2 SPATIALIZATION TECHNIQUES

2.2.2.1 ViMiC

2.2.2.1.1 BASIC FUNCTIONING

2.2.2.2 JAMOMA

2.2.2.2.1 VIMIC MODULES

2.2.2.2.2 OUTPUT MODULES