Building on the preceding theory of Sonification, Sound Spatialization, and Acoustics, a program was written in Max/MSP that uses simple numeric data (whose meaning is not necessarily simple) to control different parameters of a sound and its position in the Spatialization rendering.

Max/MSP works by building patches: programs made of functional objects that create, modify, and process streams of signals and numerical data. Patches can also be interconnected, so that an abstracted function can be reused in more than one patch. As in ordinary programming languages, this allows a hierarchical structure of parent and child objects and functions, which makes it possible to build more sophisticated systems for controlling sound creation.

The idea is to take sound files, referred to here as sound sources or simply sources, and place them somewhere in the space where the speakers are set up. The illusion of these sources moving across the listening room is achieved with the ViMiC technique, which distributes the sound of each source among the available speakers according to its position. The sources can be moved with external MIDI controllers, and this movement can also be automated with programs developed in Max/MSP.
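To make the idea of distributing a source among the speakers more concrete, the following Python sketch computes a gain and a delay for one source as seen by one virtual microphone feeding a speaker. It is only an illustration under simplifying assumptions (2D positions, a first-order directivity pattern, inverse-distance attenuation, no room reflections) and is not the actual ViMiC implementation used in the patch; the function name, its parameters, and the 0.1 m distance clamp are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature


def virtual_mic_params(source, mic_pos, mic_facing_deg, directivity=0.5):
    """Return (gain, delay_seconds) for one source and one virtual microphone.

    source, mic_pos: (x, y) positions in metres.
    mic_facing_deg: direction the microphone points, in degrees (0 = +x axis).
    directivity: 1.0 = omnidirectional, 0.5 = cardioid, 0.0 = figure-of-eight.
    """
    dx = source[0] - mic_pos[0]
    dy = source[1] - mic_pos[1]

    # Clamp the distance so a source on top of the microphone
    # does not produce an infinite gain (hypothetical 0.1 m limit).
    distance = max(math.hypot(dx, dy), 0.1)

    # Angle between the microphone axis and the direction to the source.
    angle = math.atan2(dy, dx) - math.radians(mic_facing_deg)

    # First-order microphone pattern; negative values mean inverted polarity.
    pattern = directivity + (1.0 - directivity) * math.cos(angle)

    gain = pattern / distance          # inverse-distance attenuation
    delay = distance / SPEED_OF_SOUND  # propagation time in seconds
    return gain, delay


# Example: four cardioid microphones at the corners of a 4 m square,
# each pointing away from the centre of the room.
mics = [((2, 2), 45), ((-2, 2), 135), ((-2, -2), 225), ((2, -2), 315)]
source = (1.0, 0.5)
for position, facing in mics:
    gain, delay = virtual_mic_params(source, position, facing)
    print(position, round(gain, 3), round(delay * 1000, 2), "ms")
```

Moving the source changes every gain and delay at once, which is what produces the impression of a sound travelling across the room.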

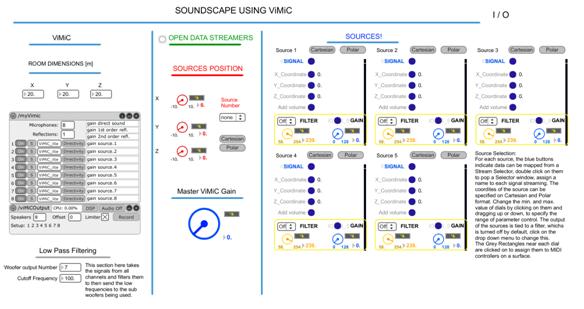

The main interface is divided into three parts, which handle the ViMiC processing itself, the positions of the sound sources, and the descriptions and parameters of the sound sources.

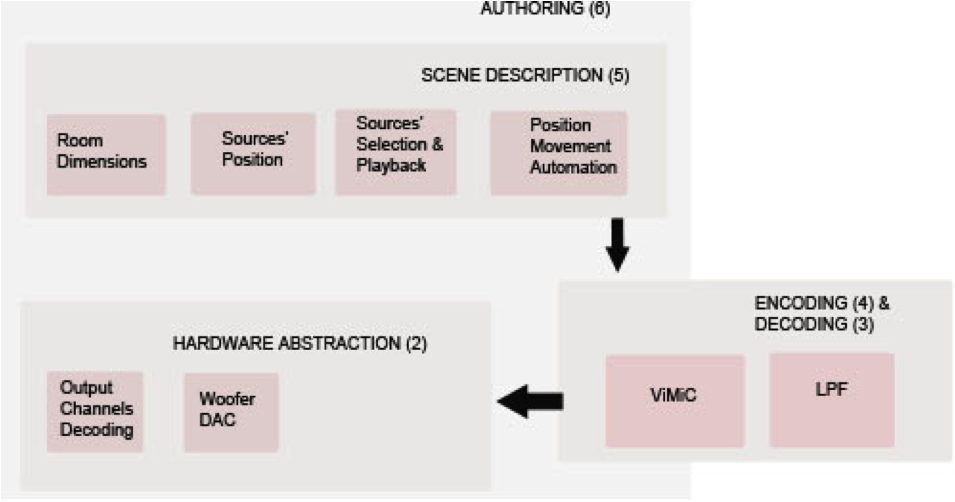

Several sub-patches work alongside the main one, adding automatic movement to the selected source and changing the maximum and minimum values of the dials used across the programs. The structure of the Max patching follows the stratified approach to sound Spatialization proposed by Peters et al. (Peters, Lossius, Schacher, Baltazar, Bascou, & Place, 2009). This structure is shown in Figure 12.

Figure 12. Structure of the soundscape application so far.

In this approach, the design of the system is layered by matching functionality: Authoring, Scene Description, Encoding/Decoding and Hardware Abstraction. This stratification eases future interoperability with other Spatialization applications.

The Authoring Layer, encompassing the Scene Description and Hardware Abstraction Layers, is defined as the software developed to face the end user; in other words, the interface through which the system is managed.

Layer 5 covers all definitions of the virtual space and the sound sources created in the Spatialization system. Layers 4 and 3 perform the actual signal processing: they take the data from Layer 5 and render it into audio signals, which are then passed to Layer 2, where the local audio reproduction tools handle them.

As mentioned above, all the control knobs (gains, source positions, etc.) are routed to work with MIDI controllers. A patch found on the Max Help blog published by University of Edinburgh lecturers (Parker, 2010) was used to make the program learn from the controller and automatically select the knob or slider to be used for each dial on the interface.
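The "learn" behaviour described above can be sketched in Python as follows. This is not the patch by Parker (2010), whose internals are not reproduced here; it is a minimal illustration that assumes standard MIDI control-change messages (a controller number and a value from 0 to 127). The class names `Dial` and `MidiLearnRouter` and the example dial ranges are hypothetical.

```python
class Dial:
    """An interface dial with an adjustable minimum and maximum value."""

    def __init__(self, name, minimum=0.0, maximum=1.0):
        self.name = name
        self.minimum = minimum
        self.maximum = maximum
        self.value = minimum


class MidiLearnRouter:
    """Binds MIDI controller numbers to dials and scales incoming values."""

    def __init__(self):
        self.bindings = {}    # controller number -> Dial
        self.learning = None  # dial currently waiting for a controller

    def learn(self, dial):
        """Put a dial in learn mode: the next controller moved is bound to it."""
        self.learning = dial

    def handle_cc(self, controller, value):
        """Process one control-change message (value in 0..127).

        Returns the new dial value, or None if the controller is unbound.
        """
        if self.learning is not None:
            # Drop any earlier binding of this dial, then bind the new one.
            self.bindings = {c: d for c, d in self.bindings.items()
                             if d is not self.learning}
            self.bindings[controller] = self.learning
            self.learning = None

        dial = self.bindings.get(controller)
        if dial is None:
            return None

        # Scale the 7-bit MIDI value to the dial's own range.
        span = dial.maximum - dial.minimum
        dial.value = dial.minimum + (value / 127.0) * span
        return dial.value


# Example: bind whichever knob is moved next to a source azimuth dial.
router = MidiLearnRouter()
azimuth = Dial("azimuth", minimum=-180.0, maximum=180.0)
router.learn(azimuth)
router.handle_cc(21, 127)  # first message binds controller 21 to the dial
print(azimuth.value)
```

Keeping the minimum and maximum on the dial itself means the same controller can drive dials with very different ranges, which matches the sub-patch that changes the dial limits.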

Figure 13. Interface for the main patch of the ViMiC application.

…

Further in this chapter:

0. CONTENT

4.2 DATA READING

4.4 STREAM SELECTORS

4.5 SCALERS

4.6 VOLUME MAKER

4.7 DATA READERS

4.8 PHYSICAL LAYER