A complete sonic experience involves sound events that we can locate at specific points in the space in which we are listening. Different mechanisms of our auditory system tell us about these positions in space: they rely on the difference in the time at which a sound arrives at each ear, as well as the difference in its intensity at each ear. These cues, which our brain uses to locate sound sources, are called binaural cues in the sound Spatialization literature; they were first described by Lord Rayleigh (Rayleigh, 1876).
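To make the two binaural cues concrete, the following Python sketch computes them. It is not taken from the sources cited here: it assumes Woodworth's spherical-head approximation for the interaural time difference (ITD), with an assumed head radius of 8.75 cm and a speed of sound of 343 m/s, and it estimates the ITD and the interaural level difference (ILD) of a pair of ear signals by cross-correlation and by an RMS ratio. The function names and the synthetic test signals are illustrative only.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s, in air at about 20 degrees Celsius
HEAD_RADIUS = 0.0875    # m, assumed average head radius (spherical-head model)


def woodworth_itd(azimuth_deg, radius=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """ITD in seconds for a distant source, using Woodworth's spherical-head formula.

    Valid for azimuths from 0 (straight ahead) to 90 degrees (fully lateral).
    """
    theta = math.radians(azimuth_deg)
    return (radius / c) * (theta + math.sin(theta))


def estimate_itd(left, right, sample_rate, max_lag):
    """Lag (in seconds) that maximizes the cross-correlation of the two ear signals.

    A positive value means the right-ear signal is delayed, i.e. the sound
    reached the left ear first.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(left[n] * right[n + lag]
                    for n in range(len(left)) if 0 <= n + lag < len(right))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / sample_rate


def estimate_ild(left, right):
    """ILD in dB; positive when the left ear receives more energy."""
    def rms(x):
        return math.sqrt(sum(s * s for s in x) / len(x))
    return 20.0 * math.log10(rms(left) / rms(right))


# Demo: the right-ear signal is a copy of the left-ear signal, delayed by
# 20 samples and attenuated to half its amplitude (synthetic noise, fixed seed).
rng = random.Random(0)
SAMPLE_RATE, DELAY, LENGTH = 44100, 20, 1000
src = [rng.uniform(-1.0, 1.0) for _ in range(LENGTH + DELAY)]
left = src[DELAY:]
right = [0.5 * s for s in src[:LENGTH]]
itd = estimate_itd(left, right, SAMPLE_RATE, max_lag=40)
ild = estimate_ild(left, right)
print(f"model ITD at 90 deg = {woodworth_itd(90.0) * 1e6:.0f} us")
print(f"estimated ITD = {itd * 1e6:.0f} us, ILD = {ild:.1f} dB")
```

With these assumptions the model gives a maximum ITD of roughly 0.66 ms for a fully lateral source, which is the order of magnitude of the timing differences the auditory system exploits.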

Sound Spatialization itself is a term used to describe the group of sound-processing techniques applied when synthesizing or reproducing these sonic events in space. As Peters stated (Peters, SpatDIF, 2008), the terms used in 3D audio applications need to be standardized. He and his colleagues are also responsible for an extension of the Open Sound Control (OSC) format that includes definitions for use in the Jamoma modules, which perform spatial sound rendering; I will come back to this later.

Standardization is pursued by proposing interchange protocol languages among Spatialization applications, such as SpatDIF (Peters, SpatDIF, 2008), so that creative work has a common ground on which to build, and a common way to refer to the coordinate systems that define the positions of sources and listeners.
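As an illustration of how a source position could travel between applications over OSC, the sketch below converts a spherical position to Cartesian coordinates and packs it into an OSC 1.0 message by hand. The address `/spatdif/source/violin/position` is only modeled on the SpatDIF style and is hypothetical; the coordinate convention (azimuth clockwise from the front, x to the right, y to the front, z up) is also an assumption, and the SpatDIF specification remains the reference for the normative namespace and conventions.

```python
import math
import struct


def osc_string(s):
    """Encode an OSC-string: ASCII bytes plus a terminating null, padded with nulls to a multiple of 4."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *floats):
    """Build an OSC 1.0 message whose arguments are all float32 ('f' type tags)."""
    type_tags = "," + "f" * len(floats)
    args = b"".join(struct.pack(">f", v) for v in floats)  # big-endian IEEE 754 float32
    return osc_string(address) + osc_string(type_tags) + args


def spherical_to_cartesian(azimuth_deg, elevation_deg, distance):
    """Assumed convention: azimuth clockwise from the front (+y), x to the right, z up."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (distance * math.cos(el) * math.sin(az),
            distance * math.cos(el) * math.cos(az),
            distance * math.sin(el))


# Demo: a source 2 m away, directly to the listener's right, at ear height.
x, y, z = spherical_to_cartesian(90.0, 0.0, 2.0)
msg = osc_message("/spatdif/source/violin/position", x, y, z)  # hypothetical address
print(len(msg), msg)
```

The resulting bytes could be sent as a UDP datagram (for example with Python's `socket` module) to an OSC-aware renderer; a real project would more likely rely on an existing OSC library than pack messages by hand.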

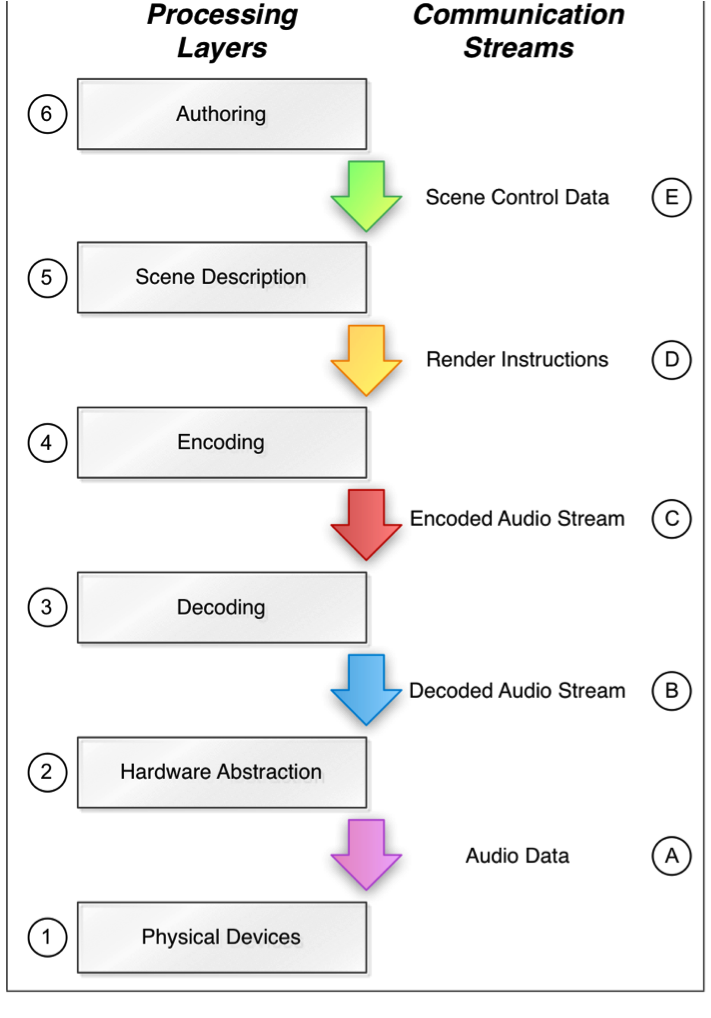

Another useful proposal, also from Peters et al. (Peters, Lossius, Schacher, Baltazar, Bascou, & Place, 2009), is the stratification of the composition or design of Spatialization systems. This stratification is modeled on networking standards, namely the Open Systems Interconnection model (OSI model), which divides networked communication systems into layers so that each layer produces and receives information in an abstract manner, allowing different applications and solutions to be used in each of them.

The layered structure proposed by Peters et al. is shown in the next figure. In it, six separate layers describe the stages of Spatialization system design: the application and its interface (Authoring Layer); the description of the virtual space, or scene; the encoding of this scene into raw data related to the sound sources; the decoding of these data according to the hardware at hand; the hardware management; and finally the physical apparatus used to produce sound.
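The point of the layered model is that each layer only sees the data handed over by its neighbor. The Python sketch below illustrates this for the scene description, encoding, decoding and hardware-management layers (authoring and the physical devices are left out). As a stand-in encoding it uses horizontal first-order Ambisonics, which Peters et al. do not prescribe; the class and function names, the azimuth convention and the routing table are all hypothetical.

```python
import math
from dataclasses import dataclass


# Scene description layer: what is in the virtual space, independent of any hardware.
@dataclass
class Source:
    name: str
    azimuth_deg: float  # assumed: horizontal angle, counter-clockwise from the front
    signal: list        # mono samples


# Encoding layer: scene -> hardware-independent representation.
def encode_foa_2d(sources):
    """Sum all sources into horizontal first-order Ambisonic channels.

    Assumed normalization: W = s, X = s * cos(theta), Y = s * sin(theta).
    """
    length = max(len(s.signal) for s in sources)
    w, x, y = [0.0] * length, [0.0] * length, [0.0] * length
    for src in sources:
        theta = math.radians(src.azimuth_deg)
        for n, sample in enumerate(src.signal):
            w[n] += sample
            x[n] += sample * math.cos(theta)
            y[n] += sample * math.sin(theta)
    return w, x, y


# Decoding layer: representation -> one feed per loudspeaker of the actual setup.
def decode_ring(bformat, speaker_azimuths_deg):
    """Basic decoder for a regular ring of N >= 3 loudspeakers:
    g = (W + 2 * (X * cos(phi) + Y * sin(phi))) / N for a speaker at azimuth phi."""
    w, x, y = bformat
    n_spk = len(speaker_azimuths_deg)
    feeds = []
    for phi_deg in speaker_azimuths_deg:
        phi = math.radians(phi_deg)
        feeds.append([(wi + 2.0 * (xi * math.cos(phi) + yi * math.sin(phi))) / n_spk
                      for wi, xi, yi in zip(w, x, y)])
    return feeds


# Hardware management layer: map loudspeaker feeds to device output channels.
def route_to_device(feeds, channel_map):
    """channel_map[i] is the device output channel of loudspeaker i (one-to-one routing assumed)."""
    n_out = max(channel_map) + 1
    out = [[0.0] * len(feeds[0]) for _ in range(n_out)]
    for i, feed in enumerate(feeds):
        out[channel_map[i]] = feed
    return out


# Demo: one source straight ahead, decoded to a square of four loudspeakers
# whose outputs are wired to device channels in a hypothetical order.
scene = [Source("violin", 0.0, [1.0])]
feeds = decode_ring(encode_foa_2d(scene), [0.0, 90.0, 180.0, 270.0])
device_out = route_to_device(feeds, [2, 3, 0, 1])
print([round(f[0], 3) for f in feeds])
```

Because the decoder only receives the W, X and Y channels, the same encoded scene can be rendered on a different loudspeaker ring by changing the list of speaker azimuths, without touching the scene or the encoding code; this separation between layers is what the model aims for.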

…

Further in this chapter:

0. CONTENT

2.1 SONIFICATION

2.1.1 SONIFICATION DEFINITIONS AND CONCEPTS

2.2 SPATIALIZATION

2.2.1 ACOUSTICS INVOLVED IN SPATIALIZATION

2.2.1.1 COORDINATES SYSTEM

2.2.1.2 DELAY AND GAIN

2.2.1.3 REFLECTIONS

2.2.1.4 SOUND ACQUIREMENT

2.2.2 SPATIALIZATION TECHNIQUES

2.2.2.1 ViMiC

2.2.2.1.1 BASIC FUNCTIONING

2.2.2.2 JAMOMA

2.2.2.2.1 VIMIC MODULES

2.2.2.2.2 OUTPUT MODULES