2.2.1.1 COORDINATE SYSTEM

For the model proposed in this paper, a coordinate system needs to be selected and described, so that positions in space can be referred to clearly and consistently with respect to the listener.

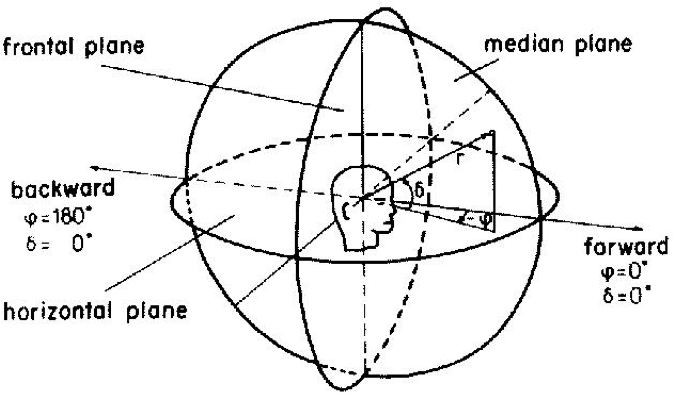

In a sound spatialization environment, the listener perceives sound from a first-person perspective, so the terminology used to describe the position of a sound in 3D space is defined with respect to the listener. In this paradigm, three planes are used to locate an event around the listener: the frontal, median and horizontal planes. The median plane divides the head into left and right halves, the frontal plane divides it into front and back, and the horizontal plane passes through both ears and divides the space into above and below. Together these planes form the Head Related Coordinate System, on which we base the vocabulary used in the rest of this paper to describe positions around the head.
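As an illustration of how the three planes partition the space around the head, the following minimal sketch reports on which side of each plane a point lies. It assumes a hypothetical axis convention that the text does not fix: the origin at the centre of the head, x pointing to the listener's right, y to the front and z upwards, so that the median plane is x = 0, the frontal plane is y = 0 and the horizontal plane is z = 0. The function name `describe_position` is illustrative only.

```python
def describe_position(x: float, y: float, z: float) -> tuple[str, str, str]:
    """Locate a point relative to the median, frontal and horizontal planes.

    Assumed (hypothetical) convention: origin at the centre of the head,
    x to the listener's right, y to the front, z upwards.
    """
    # Median plane (x = 0) separates left from right.
    side = "right" if x > 0 else "left" if x < 0 else "on median plane"
    # Frontal plane (y = 0) separates front from back.
    depth = "front" if y > 0 else "back" if y < 0 else "on frontal plane"
    # Horizontal plane (z = 0) separates above from below.
    height = "above" if z > 0 else "below" if z < 0 else "on horizontal plane"
    return side, depth, height


if __name__ == "__main__":
    # A source ahead and to the right of the listener, at ear level.
    print(describe_position(1.0, 1.0, 0.0))
```

With this convention, a source ahead and to the right at ear level lies to the right of the median plane, in front of the frontal plane and on the horizontal plane.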

The figure depicts a sphere around the head; positions within this sphere can be described using either of two reference systems: Cartesian (x, y and z) or polar (more precisely, spherical). The latter locates a point in space with two angles and one distance. The first angle, called azimuth, is measured on the horizontal plane. The second, called elevation, raises the point above (or lowers it below) the horizontal plane along the direction given by the azimuth. The distance, or radius, is measured from the centre of the head and can be held constant while the two angles vary.
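Since both reference systems describe the same points, one can be converted into the other. The sketch below shows the standard conversion, assuming a hypothetical convention the text does not specify: x to the listener's right, y to the front, z upwards, azimuth in degrees measured on the horizontal plane from straight ahead and increasing towards the right, and elevation in degrees measured upwards from the horizontal plane. Other conventions (for example, azimuth increasing towards the left) change the signs; the function names are illustrative only.

```python
import math


def spherical_to_cartesian(azimuth_deg: float, elevation_deg: float, radius: float) -> tuple[float, float, float]:
    """Convert (azimuth, elevation, radius) to (x, y, z) under the assumed convention."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = radius * math.cos(el)  # projection of the radius onto the horizontal plane
    x = horizontal * math.sin(az)       # right of the median plane is positive
    y = horizontal * math.cos(az)       # in front of the frontal plane is positive
    z = radius * math.sin(el)           # above the horizontal plane is positive
    return x, y, z


def cartesian_to_spherical(x: float, y: float, z: float) -> tuple[float, float, float]:
    """Convert (x, y, z) back to (azimuth, elevation, radius) in degrees and the same length unit."""
    radius = math.sqrt(x * x + y * y + z * z)
    azimuth = math.degrees(math.atan2(x, y))                    # 0 = front, 90 = right
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))   # 0 = ear level, 90 = overhead
    return azimuth, elevation, radius


if __name__ == "__main__":
    # A source 2 m away, directly to the listener's right, at ear level.
    print([round(v, 6) for v in spherical_to_cartesian(90.0, 0.0, 2.0)])
```

Using `atan2` rather than a plain arctangent keeps the azimuth in the correct quadrant for sources behind the listener.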

For the model studied in this paper, either reference system can be used. However, as will be seen later, moving a source along the characteristic coordinates of each system (straight lines along x, y and z, or arcs in azimuth and elevation) produces a substantial difference in the perceived location of sounds in space.
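One geometric reason the choice of system may matter can be sketched as follows. Moving a source from straight ahead to the listener's right by interpolating its Cartesian coordinates makes it travel along a straight chord that passes closer to the head, whereas interpolating its azimuth keeps it on an arc of constant radius. This is a hypothetical illustration under the same assumed axis convention as above (x right, y front, z up, azimuth increasing towards the right); it is not a description of how any particular spatialization tool interpolates positions, and the helper names are illustrative.

```python
import math


def spherical_to_cartesian(azimuth_deg: float, elevation_deg: float, radius: float) -> tuple[float, float, float]:
    """Assumed convention: x right, y front, z up; azimuth 0 = front, increasing to the right."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (radius * math.cos(el) * math.sin(az),
            radius * math.cos(el) * math.cos(az),
            radius * math.sin(el))


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def cartesian_path(start, end, steps):
    """Straight-line path: interpolate x, y and z independently."""
    return [tuple(lerp(s, e, i / steps) for s, e in zip(start, end)) for i in range(steps + 1)]


def spherical_path(start, end, steps):
    """Arc path: interpolate azimuth, elevation and radius, then convert to x, y, z."""
    return [spherical_to_cartesian(*(lerp(s, e, i / steps) for s, e in zip(start, end)))
            for i in range(steps + 1)]


def distances(path):
    """Distance from the centre of the head for every point on a path."""
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in path]


if __name__ == "__main__":
    # From straight ahead (azimuth 0) to the right (azimuth 90), 1 m away, at ear level.
    start_aed, end_aed = (0.0, 0.0, 1.0), (90.0, 0.0, 1.0)
    start_xyz = spherical_to_cartesian(*start_aed)
    end_xyz = spherical_to_cartesian(*end_aed)
    print("Cartesian path:", [round(d, 3) for d in distances(cartesian_path(start_xyz, end_xyz, 4))])
    print("Spherical path:", [round(d, 3) for d in distances(spherical_path(start_aed, end_aed, 4))])
```

At the midpoint the straight-line path passes about 0.71 m from the centre of the head, while the azimuth path stays at 1 m throughout, so the two trajectories differ in distance as well as in direction.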

…

Further in this chapter:

0. CONTENT

2.1 SONIFICATION

2.1.1 SONIFICATION DEFINITIONS AND CONCEPTS

2.2 SPATIALIZATION

2.2.1 ACOUSTICS INVOLVED IN SPATIALIZATION

2.2.1.1 COORDINATE SYSTEM

2.2.1.2 DELAY AND GAIN

2.2.1.3 REFLECTIONS

2.2.1.4 SOUND ACQUIREMENT

2.2.2 SPATIALIZATION TECHNIQUES

2.2.2.1 ViMiC

2.2.2.1.1 BASIC FUNCTIONING

2.2.2.2 JAMOMA

2.2.2.2.1 VIMIC MODULES

2.2.2.2.2 OUTPUT MODULES