This project consists of a simple Android Application that implements an algorithm for a Variable Order Comb Filter.

Code on GitHub

The Application reads a short WAV file from its resources, plays it back and filters it. A toggle button turns the filtering on or off, and a slider selects the order of the Comb Filter.

To achieve this, a framework has to be built in Android, specifically in Java: a running Activity creates an object of a new class that runs as a separate Thread.

A separate Thread is needed because the main Activity is the one controlling the GUI. The parallel Thread is where audio playback happens. If the audio processing ran in the main Activity instead, the GUI would have to wait for the audio processing to finish before it could continue with its own tasks.
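A minimal sketch of this split, under stated assumptions: the names `AudioSink`, `PlaybackThread` and `CHUNK` are hypothetical, and `AudioSink` stands in for `android.media.AudioTrack` so the code runs on a plain JVM. The GUI thread only flips a `volatile` flag (as the toggle button would), while the worker thread performs the blocking writes.

```java
// Hypothetical stand-in for android.media.AudioTrack, so the sketch runs on a plain JVM.
interface AudioSink {
    void write(short[] buffer, int offset, int length);
}

// Hypothetical playback worker: the GUI thread only sets flags, this thread does the writes.
class PlaybackThread extends Thread {
    private static final int CHUNK = 1024;       // samples per write (assumed value)
    private final short[] dry;                   // unfiltered samples
    private final short[] wet;                   // filtered samples, same length as dry
    private final AudioSink sink;
    private volatile boolean running = true;     // cleared by the GUI thread to stop playback
    private volatile boolean filterOn = false;   // written by the GUI thread, read here

    PlaybackThread(short[] dry, short[] wet, AudioSink sink) {
        this.dry = dry;
        this.wet = wet;
        this.sink = sink;
    }

    // Called from the GUI thread, e.g. from the toggle button's listener.
    void setFilterOn(boolean on) { filterOn = on; }

    void stopPlayback() { running = false; }

    @Override
    public void run() {
        int position = 0;
        while (running && position < dry.length) {
            short[] source = filterOn ? wet : dry;   // a toggle takes effect at the next chunk
            int n = Math.min(CHUNK, dry.length - position);
            sink.write(source, position, n);        // a real AudioTrack write blocks here
            position += n;
        }
    }
}
```

On Android the Activity would construct the thread and call `start()`; calling `run()` directly, as in a quick test, executes the loop on the caller's thread instead.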

With this in mind, the following is a description of what the MainActivity.java file contains.

In a nutshell, the WAV file is read into the music byte array, converted into the music2Short short array, processed, and the result stored in the musicFiltered short array. Finally, depending on whether the Filter On/Off toggle button of the GUI is pressed, the audio is played by writing either musicFiltered or music into audioOut. This last object is an instance of AudioTrack, a built-in Android class (android.media.AudioTrack) that accepts byte or short arrays and plays them according to the parameters it was initialized with.
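The byte-to-short conversion step could look like the following sketch. It assumes the WAV data is little-endian 16-bit PCM and that a canonical 44-byte header precedes the samples (real files may contain extra chunks); `PcmConversion`, `bytesToShorts` and `WAV_HEADER_BYTES` are hypothetical names, not taken from the app.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical helper: turns raw 16-bit PCM bytes into samples.
class PcmConversion {
    // Size of a canonical PCM WAV header (assumption: no extra chunks in the file).
    static final int WAV_HEADER_BYTES = 44;

    /** Converts little-endian 16-bit PCM bytes, starting at offset, into shorts. */
    static short[] bytesToShorts(byte[] pcm, int offset) {
        int count = (pcm.length - offset) / 2;     // a trailing odd byte is ignored
        short[] out = new short[count];
        ByteBuffer.wrap(pcm, offset, count * 2)
                  .order(ByteOrder.LITTLE_ENDIAN)  // WAV stores samples little-endian
                  .asShortBuffer()                 // view starts at the buffer's position
                  .get(out);
        return out;
    }
}
```

In the app's terms this would be called as `bytesToShorts(music, PcmConversion.WAV_HEADER_BYTES)` to fill music2Short.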

In this case, the app uses the STREAM_MUSIC stream type, a sample rate of 44100 Hz, MONO output and 16-bit PCM encoding, among other parameters.
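As a quick worked example of what these parameters imply, mono 16-bit PCM at 44100 Hz consumes 44100 × 1 × 2 = 88,200 bytes per second. The hypothetical helper below converts a buffer size into the playback time it covers, which is handy when reasoning about latency and dropouts. On Android, the minimum buffer size for a given configuration can be queried with `AudioTrack.getMinBufferSize(sampleRateInHz, channelConfig, audioFormat)`.

```java
// Hypothetical constants mirroring the configuration described above.
class PcmFormat {
    static final int SAMPLE_RATE_HZ = 44_100;
    static final int CHANNELS = 1;          // MONO
    static final int BYTES_PER_SAMPLE = 2;  // 16-bit PCM

    /** Bytes consumed per second of playback. */
    static int bytesPerSecond() {
        return SAMPLE_RATE_HZ * CHANNELS * BYTES_PER_SAMPLE;
    }

    /** Duration, in milliseconds, of audio held by a buffer of the given size. */
    static double bufferMillis(int bufferBytes) {
        return 1000.0 * bufferBytes / bytesPerSecond();
    }
}
```

For example, an 8,820-byte buffer holds 100 ms of audio at these settings.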

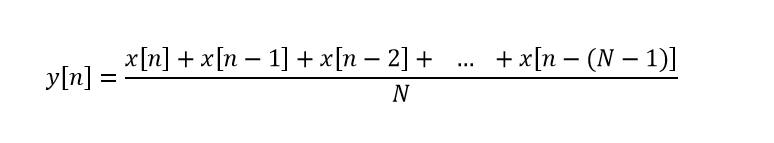

The comb filter is implemented by taking the first frame of the audio file, with a frame size equal to the length of music, so the file is processed as a single block. The output is computed in the following way.
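One standard feedforward form of an N-th order comb filter, assumed here because it uses only the symbols defined below, is:

$$
y[n] = x[n] + x[n-N], \qquad H(z) = 1 + z^{-N}
$$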

Where N is the order of the filter, y[n] is the current output to compute and x[n] is the current input sample.
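Under the same assumption of a feedforward comb filter, y[n] = x[n] + x[n − N], a whole-array version of the processing step might look like this. `CombFilter` and `process` are hypothetical names. Samples before the start of the signal are treated as zero, and the sum is computed in `int` and clipped to the 16-bit range, since adding two full-scale samples can overflow a `short`.

```java
// Hypothetical offline comb filter over the whole signal (one frame = whole file).
class CombFilter {
    static final int MAX_ORDER = 102; // upper limit mentioned in the text

    /** Applies the assumed y[n] = x[n] + x[n - N]; x[n - N] is 0 for n < N. */
    static short[] process(short[] x, int order) {
        if (order < 1 || order > MAX_ORDER) {
            throw new IllegalArgumentException("order out of range: " + order);
        }
        short[] y = new short[x.length];
        for (int n = 0; n < x.length; n++) {
            int delayed = n >= order ? x[n - order] : 0;
            int sum = x[n] + delayed;                       // int arithmetic avoids overflow
            sum = Math.max(Short.MIN_VALUE, Math.min(Short.MAX_VALUE, sum)); // clip to 16 bits
            y[n] = (short) sum;
        }
        return y;
    }
}
```

With the app's arrays this would read `musicFiltered = CombFilter.process(music2Short, 23);` for a 23rd-order filter.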

Implementation, Results, Comments & TODO list.

Overall, the filter implementation is successful: the application can handle high filter orders, up to the coded maximum of 102 taps. Filtering can be turned on and off via the GUI, and the order of the filter can be changed in real time from 1 to 102 with no noticeable delay.
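Changing the order in real time suggests a streaming design. The sketch below, again assuming y[n] = x[n] + x[n − N] and using hypothetical names, keeps a circular buffer of the last 102 input samples, so any order from 1 to 102 can be selected instantly without reallocating memory.

```java
// Hypothetical block-based comb filter whose order can change between blocks.
class StreamingCombFilter {
    static final int MAX_ORDER = 102;                  // upper limit of the slider
    private final int[] history = new int[MAX_ORDER];  // last MAX_ORDER input samples
    private int writeIndex = 0;                        // slot holding the oldest input
    private volatile int order = 1;                    // set from the GUI thread

    void setOrder(int newOrder) {
        if (newOrder < 1 || newOrder > MAX_ORDER) {
            throw new IllegalArgumentException("order out of range: " + newOrder);
        }
        order = newOrder;
    }

    /** Filters block[offset .. offset + length) in place. */
    void process(short[] block, int offset, int length) {
        int n = order; // read once so the delay is constant within a block
        for (int i = offset; i < offset + length; i++) {
            int x = block[i];
            // Before overwriting, this slot holds the input from exactly n samples ago.
            int delayed = history[(writeIndex - n + MAX_ORDER) % MAX_ORDER];
            history[writeIndex] = x;
            writeIndex = (writeIndex + 1) % MAX_ORDER;
            int sum = Math.max(Short.MIN_VALUE, Math.min(Short.MAX_VALUE, x + delayed));
            block[i] = (short) sum;
        }
    }
}
```

Because the history always holds the last 102 inputs regardless of the current order, a slider change only alters which slot is read. An abrupt change may still produce an audible click, which a short crossfade between the old and new outputs could soften.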

Note, however, that packets are lost from time to time, especially when the Android device is busy with other tasks that demand more memory. This is probably the first thing to look at for improvement.

I also tried to implement a convolution of the audio file with an impulse response, without much success: convolution is computationally intensive, and the application could not handle it. I reckon it is necessary to go deeper and implement an interface to native C++ code to speed up the processing for convolution and for other computations such as FFTs.
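To make the cost concrete, here is a direct (time-domain) convolution sketch; `DirectConvolution` is a hypothetical name. It performs x.length × h.length multiply-adds, so convolving 1 s of audio at 44.1 kHz with a 1 s impulse response takes 44100 × 44100 ≈ 1.94 × 10⁹ of them, a heavy load for a mobile CPU, especially from Java. FFT-based (fast) convolution reduces the cost to O(L log L) per block of length L, which is why FFTs and native code come up together.

```java
// Hypothetical helper illustrating direct (time-domain) linear convolution.
class DirectConvolution {
    /**
     * Returns y = x * h, of length x.length + h.length - 1.
     * Cost: x.length * h.length multiply-adds, which is what makes
     * long impulse responses expensive.
     */
    static float[] convolve(float[] x, float[] h) {
        if (x.length == 0 || h.length == 0) {
            return new float[0];
        }
        float[] y = new float[x.length + h.length - 1];
        for (int n = 0; n < x.length; n++) {
            for (int k = 0; k < h.length; k++) {
                y[n + k] += x[n] * h[k]; // every input sample is scaled by every tap
            }
        }
        return y;
    }
}
```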

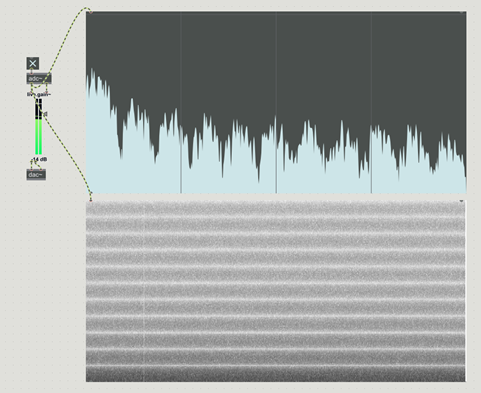

The picture above shows the tests run to measure the Frequency Response of the application when playing White Noise at 44.1 kHz with 16-bit PCM samples, filtered with a 23rd-order Comb Filter. The characteristic lobes are easily observable, as is a discontinuity on the left of the bottom spectrogram, where one or more packets were lost.
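Assuming the feedforward form y[n] = x[n] + x[n − N], the lobes can be predicted: |H(f)| = 2|cos(πfN/fs)|, with notches at f = (2k + 1)·fs/(2N). For N = 23 and fs = 44.1 kHz, the first notch is at about 958.7 Hz and the notches repeat every fs/N ≈ 1917.4 Hz. The hypothetical helper below computes these values so they can be compared against the spectrogram.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for the assumed comb filter y[n] = x[n] + x[n - N].
class CombResponse {
    /** Magnitude response |H(f)| = 2 |cos(pi f N / fs)|. */
    static double magnitude(double f, int order, double fs) {
        return 2.0 * Math.abs(Math.cos(Math.PI * f * order / fs));
    }

    /** Notch frequencies (2k + 1) fs / (2N), from 0 up to the Nyquist frequency fs / 2. */
    static List<Double> notches(int order, double fs) {
        List<Double> out = new ArrayList<>();
        for (int k = 0; ; k++) {
            double f = (2 * k + 1) * fs / (2.0 * order);
            if (f > fs / 2 + 1e-9) {
                break;
            }
            out.add(f);
        }
        return out;
    }
}
```

For N = 23 this yields 12 notches up to 22.05 kHz, the last one exactly at the Nyquist frequency because N is odd.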

Another improvement would be the ability to select among different audio files for playback. Currently the application is hardcoded to play a single file, but within the code one can choose among 3 files by commenting or uncommenting 3 lines:

- White Noise

- Rhapsody in Blue: not the best choice for perceiving changes in Frequency Response.

- Symphonix: techno music rich in frequencies, good for perceiving the Frequency Response.

The application was developed using:

- Samsung tablet GT-N5110, a.k.a. Note 8”, running Android KitKat, version 4.4.2. On this device the application ran almost without lag.

- ZTE P736E Vivacity T-Mobile smartphone, running Android Gingerbread, version 2.3.5. On this device the lag was noticeable, even catastrophic.

In conclusion, this starting framework is a useful base for building more complex Audio Processing systems on Android. Things to develop further are:

- Pass-through applications, where the microphone is used as input, and the audio is processed and then played back.

  - Possible problems: latency, and the need for lower-level native C++ programming.

- Convolution of audio from the microphone or from audio files.

  - Possible problems: latency, and the need for lower-level native C++ programming.

- Implementation using the Networking and Bluetooth stacks, to make the system more versatile and give it multichannel capabilities.

- Other types of filtering and processing, such as multiband equalization.

- The application currently has a bug: the audio is reset when the application returns to the foreground after being in the background (i.e. after another app has come forward, leaving this one behind). This has to be handled with the Application Lifecycle methods mentioned earlier, such as onPause() and onResume().
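One way to address this bug, sketched under assumptions: keep the playback position in a field that survives pausing, so that when the app returns to the foreground playback continues from where it stopped instead of restarting. `ResumablePlayer` and `AudioSink` are hypothetical (`AudioSink` stands in for AudioTrack); on Android, `pause()` would be called from `onPause()` and `play()` would run on a worker thread started from `onResume()`.

```java
// Hypothetical stand-in for android.media.AudioTrack.
interface AudioSink {
    void write(short[] buffer, int offset, int length);
}

// Hypothetical player that remembers where it stopped instead of restarting.
class ResumablePlayer {
    private final short[] samples;
    private final int chunk;
    // Kept across pause/resume. Only the playback thread writes it; joining the
    // old worker before starting a new one makes the value visible to the new one.
    private int position = 0;
    private volatile boolean paused = false;

    ResumablePlayer(short[] samples, int chunk) {
        this.samples = samples;
        this.chunk = chunk;
    }

    /** Writes chunks from the saved position until the end or until pause() is called. */
    void play(AudioSink sink) {
        paused = false;
        while (!paused && position < samples.length) {
            int n = Math.min(chunk, samples.length - position);
            sink.write(samples, position, n);
            position += n;
        }
    }

    /** Safe to call from another thread, e.g. from Activity.onPause(). */
    void pause() { paused = true; }

    int position() { return position; }
}
```

If Android destroys the Activity while it is in the background, the position would also have to be saved elsewhere, for example in `onSaveInstanceState()`.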

Possible applications include (but are not restricted to):

- Sound spatialization: 3D and binaural audio.

- Multichannel compositions, and rendering of, say, soundscapes.

- Art installations.

- Museum tours.

- Augmented reality.

- Non-audio signal processing using audio hardware: feeding voltages to electronic circuits, data transmission, etc.

- Monitoring of non-audio signals for automation purposes.

As seen, the main problem is that Android's programming language is Java, which is high-level and abstract, and this in turn makes it slower. The solution is to implement the critical algorithms in native C++.